When an x86 system is under heavy network load, the Ethernet interface carrying that traffic can experience packet overruns. An overrun causes frame loss on incoming or outgoing packets, so it is a counter that should be monitored.

1. Causes of packet overrun

By far the most common reason for frame loss is a queue overrun. The kernel sets a limit on the length of a queue, and in some cases the queue fills faster than it drains. When this goes on for too long, frames start to get dropped.
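The fill-faster-than-drain behaviour can be illustrated with a toy model. The sketch below is not how the kernel implements its queues; it only assumes a fixed-capacity queue with a constant arrival rate and a constant drain rate per tick, and all numbers are hypothetical.

```python
def simulate_queue(capacity, arrivals_per_tick, drained_per_tick, ticks):
    """Count frames lost when a bounded queue fills faster than it drains."""
    queued = 0
    dropped = 0
    for _ in range(ticks):
        queued += arrivals_per_tick
        if queued > capacity:
            # No room left: the surplus frames are lost (the overrun).
            dropped += queued - capacity
            queued = capacity
        # Drain at most what is actually queued.
        queued -= min(queued, drained_per_tick)
    return dropped


if __name__ == "__main__":
    # Hypothetical numbers: a 1000-slot queue, 120 frames in and 100 out per tick.
    print(simulate_queue(1000, 120, 100, ticks=100))
    # Same queue with arrivals matched to the drain rate.
    print(simulate_queue(1000, 100, 100, ticks=100))
```

In this model nothing is lost until the queue first fills. After that, every tick loses exactly the difference between the arrival and drain rates.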

    [root@/ ~]# ip -s -s link ls p4p2
    11: p4p2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc mq state UP qlen 1000
        link/ether 00:10:18:ce:0c:0e brd ff:ff:ff:ff:ff:ff
        RX: bytes  packets    errors  dropped overrun mcast
        3405890854 1534610082 146442  0       146442  13189
        RX errors: length     crc     frame   fifo    missed
                   0          0       0       0       0
        TX: bytes  packets    errors  dropped carrier collsns
        3957714091 1198049468 0       0       0       0
        TX errors: aborted    fifo    window  heartbeat
                   0          0       0       0
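For monitoring, the counters in this output can be extracted with a small script. The following is a minimal sketch that assumes the header-line / value-line layout shown above; `parse_ip_link_stats` is a hypothetical helper name, and the sample text repeats the RX and TX rows from the transcript.

```python
def parse_ip_link_stats(text):
    """Map 'RX'/'TX' to {column name: counter} from `ip -s link` output."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    stats = {}
    # Pair every line with the one below it; a header is followed by its values.
    for header, values in zip(lines, lines[1:]):
        if header.startswith(("RX:", "TX:")):
            direction, names = header.split(":", 1)
            stats[direction] = dict(zip(names.split(), map(int, values.split())))
    return stats


SAMPLE = """\
RX: bytes packets errors dropped overrun mcast
3405890854 1534610082 146442 0 146442 13189
TX: bytes packets errors dropped carrier collsns
3957714091 1198049468 0 0 0 0
"""

rx = parse_ip_link_stats(SAMPLE)["RX"]
overrun_ratio = rx["overrun"] / rx["packets"]
print(f"RX overruns: {rx['overrun']} ({overrun_ratio:.4%} of received packets)")
```

Running it periodically and comparing successive values shows whether the overrun counter is still growing or reflects a past burst.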

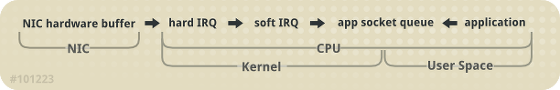

2. Network Receive Path Diagram

Understanding the path a packet takes on reception (the path diagram) makes it easier to decide, during an incident, whether to troubleshoot the physical device or the operating system.

As the figure above shows, the Linux kernel receives a packet in four basic stages.

- Hardware Reception: the network interface card (NIC) receives the frame on the wire. Depending on its driver configuration, the NIC transfers the frame either to an internal hardware buffer memory or to a specified ring buffer.

- Hard IRQ: the NIC asserts the presence of a net frame by interrupting the CPU. This causes the NIC driver to acknowledge the interrupt and schedule the soft IRQ operation.

- Soft IRQ: this stage implements the actual frame-receiving process, and is run in softirq context. This means that the stage pre-empts all applications running on the specified CPU, but still allows hard IRQs to be asserted.

  In this context (running on the same CPU as hard IRQ, thereby minimizing locking overhead), the kernel actually removes the frame from the NIC hardware buffers and processes it through the network stack. From there, the frame is either forwarded, discarded, or passed to a target listening socket.

  When passed to a socket, the frame is appended to the application that owns the socket. This process is done iteratively until the NIC hardware buffer runs out of frames, or until the device weight (dev_weight) is reached. For more information about device weight, refer to Section 8.4.1, “NIC Hardware Buffer”.

- Application receive: the application receives the frame and dequeues it from any owned sockets via the standard POSIX calls (read, recv, recvfrom). At this point, data received over the network no longer exists on the network stack.
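The last stage can be seen from user space with a few lines of socket code. This is only an illustrative sketch: it sends one UDP datagram over the loopback interface, so no physical NIC or hardware buffer is involved, and the payload and buffer size are arbitrary.

```python
import socket

# Receiver: the kernel queues incoming datagrams on this socket's receive buffer.
receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
receiver.bind(("127.0.0.1", 0))      # port 0 lets the kernel pick a free port
receiver.settimeout(2.0)             # avoid blocking forever if nothing arrives
address = receiver.getsockname()

# Sender: stands in for remote traffic.
sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
sender.sendto(b"frame payload", address)

# Application receive: recvfrom() dequeues the datagram from the socket.
payload, peer = receiver.recvfrom(2048)
print(payload, peer)

sender.close()
receiver.close()
```

Until `recvfrom` is called, the datagram sits in the socket's receive buffer. An application that reads too slowly therefore moves the bottleneck from the NIC queue to the socket queue.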

5. CPU Affinity

To maintain high throughput on the receive path, it is recommended that you keep the L2 cache hot. As described earlier, network buffers are received on the same CPU as the IRQ that signaled their presence. This means that buffer data will be on the L2 cache of that receiving CPU.

To take advantage of this, place process affinity on applications expected to receive the most data on the NIC that shares the same core as the L2 cache. This will maximize the chances of a cache hit, and thereby improve performance.
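One way to apply this is to find the CPU that services the NIC's receive interrupts and pin the receiving application to it. The sketch below assumes a `/proc/interrupts`-style table; the sample rows, the `eth0-rx` queue name, and both helper names are hypothetical, and `os.sched_setaffinity` is available on Linux only.

```python
import os


def busiest_cpu_for(interrupts_text, device):
    """Return the CPU index that serviced the most IRQs whose name contains `device`."""
    lines = interrupts_text.splitlines()
    ncpus = len(lines[0].split())              # header row: CPU0 CPU1 ...
    totals = [0] * ncpus
    for line in lines[1:]:
        fields = line.split()
        # Skip short summary rows and rows that belong to other devices.
        if len(fields) < ncpus + 2 or device not in fields[-1]:
            continue
        for cpu, count in enumerate(fields[1:1 + ncpus]):
            totals[cpu] += int(count)
    return totals.index(max(totals))


def pin_current_process(cpu):
    """Restrict the calling process to one CPU (Linux only)."""
    os.sched_setaffinity(0, {cpu})


# Hypothetical /proc/interrupts excerpt for a two-CPU machine.
SAMPLE = """\
           CPU0       CPU1
 234:       1200    9850000   PCI-MSI-edge      eth0-rx-0
 235:     430000        310   PCI-MSI-edge      eth0-tx-0
"""
cpu = busiest_cpu_for(SAMPLE, "eth0-rx")
print(f"receive IRQs are mostly handled on CPU{cpu}")
```

On a live system the text would come from `/proc/interrupts`, and the application would call `pin_current_process(cpu)` at startup. Whether the same CPU or a sibling sharing the L2 cache is the better choice depends on the CPU topology.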

3. Workarounds from the operating system side

When a queue overrun occurs, check the state of the hardware buffer to see whether the number of lost frames is increasing.

[root@linux]# ethtool -S ethX | grep frame

rx_frame_error = 0

If rx_frame_error is increasing in the output above, proceed as follows.

Replace ethX with the NIC's corresponding device name. This will display how many frames have been dropped within ethX. Often, a drop occurs because the queue runs out of buffer space in which to store frames.

There are different ways to address this problem, namely:

Input traffic

You can help prevent queue overruns by slowing down input traffic. This can be achieved by filtering, reducing the number of joined multicast groups, lowering broadcast traffic, and the like.

Queue length

Alternatively, you can also increase the queue length. This involves increasing the number of buffers in a specified queue to whatever maximum the driver will allow. To do so, edit the rx/tx ring parameters of ethX using:

ethtool --set-ring ethX

Append the appropriate rx or tx values to the aforementioned command. For more information, refer to man ethtool.

Device weight

You can also increase the rate at which a queue is drained. To do this, adjust the NIC's device weight accordingly. This attribute refers to the maximum number of frames that the NIC can receive before the softirq context has to yield the CPU and reschedule itself. It is controlled by the /proc/sys/net/core/dev_weight variable.

Most administrators have a tendency to choose the third option. However, keep in mind that there are consequences for doing so. Increasing the number of frames that can be received from a NIC in one iteration implies extra CPU cycles, during which no applications can be scheduled on that CPU.
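Before changing the device weight, it can help to check whether softirq processing is actually falling behind. A minimal sketch, assuming the usual `/proc/net/softnet_stat` layout of one row per CPU with hexadecimal columns, where the first three columns are packets processed, packets dropped because the backlog was full, and the number of times processing stopped with work still pending. The sample rows are hypothetical.

```python
def parse_softnet_stat(text):
    """Return one dict per row of /proc/net/softnet_stat (hexadecimal columns)."""
    rows = []
    for index, line in enumerate(text.splitlines()):
        cols = [int(col, 16) for col in line.split()]
        if len(cols) < 3:
            continue
        rows.append({
            "row": index,                # rows follow the order of online CPUs
            "processed": cols[0],
            "dropped": cols[1],
            "time_squeeze": cols[2],
        })
    return rows


# Hypothetical two-CPU sample; on a live system read /proc/net/softnet_stat.
SAMPLE = """\
0005a3f1 00000000 0000001c 00000000 00000000 00000000 00000000 00000000 00000000
00012b44 00000007 00000000 00000000 00000000 00000000 00000000 00000000 00000000
"""
rows = parse_softnet_stat(SAMPLE)
for row in rows:
    print(row)
```

A steadily growing third column suggests the softirq is running out of budget before the queue is empty, which is the situation the device weight discussion above is about.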

References

Issue

- A NIC shows a number of overruns in ifconfig output, as in the example below:

    eth0      Link encap:Ethernet  HWaddr D4:AE:52:34:E6:2E
              UP BROADCAST RUNNING SLAVE MULTICAST  MTU:1500  Metric:1
    >>        RX packets:1419121922 [errors:71111] dropped:0 [overruns:71111] frame:0 <<
              TX packets:1515463943 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:864269160026 (804.9 GiB)  TX bytes:1319266440662 (1.1 TiB)
              Interrupt:234 Memory:f4800000-f4ffffff

Environment

- Red Hat Enterprise Linux

Resolution

This is not a problem in the operating system. It is related to the infrastructure of the environment: the network in use does not appear able to support the required traffic.

Workaround

The following steps can be used to try to mitigate this kind of issue.

1 - First, configure the network device to use jumbo frames, that is, increase the MTU (the largest packet size sent without fragmentation) used on this device.

1.1 - Edit the /etc/sysconfig/network-scripts/ifcfg-ethX file and insert the following parameter: MTU=9000

With this parameter, the interface and its aliases use the specified MTU.

Restart the interface for the setting to take effect.

2 - Increase the ring buffer to the highest supported value:

2.1 - Check the values currently set (Current hardware settings:) and the maximum accepted values (Pre-set maximums:), as in the example below:

    # ethtool -g eth0
    Ring parameters for eth0:
    Pre-set maximums:
    RX:             4096
    RX Mini:        0
    RX Jumbo:       0
    TX:             4096
    Current hardware settings:
    RX:             256
    RX Mini:        0
    RX Jumbo:       0
    TX:             256

    # ethtool -G eth0 rx 4096 tx 4096

    # ethtool -g eth0
    Ring parameters for eth0:
    Pre-set maximums:
    RX:             4096
    RX Mini:        0
    RX Jumbo:       0
    TX:             4096
    Current hardware settings:
    RX:             4096
    RX Mini:        0
    RX Jumbo:       0
    TX:             4096

In the example above, the command # ethtool -G eth0 rx 4096 tx 4096 sets the ring buffer to the maximum size supported by this device.

Add this command to /etc/rc.local so that it persists across reboots, because the setting cannot be stored on the device itself.
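The check-then-raise procedure in step 2 can be scripted. The sketch below is one possible approach, not part of the original article: it parses text in the `ethtool -g` layout shown above and builds an `ethtool -G` argument list only for rings that are below their maximum. Both function names are hypothetical.

```python
def parse_ring_params(text):
    """Split `ethtool -g` output into (maximums, current) dictionaries."""
    maximums, current = {}, {}
    target = None
    for line in text.splitlines():
        key, _, value = line.strip().partition(":")
        value = value.strip()
        if key == "Pre-set maximums":
            target = maximums
        elif key == "Current hardware settings":
            target = current
        elif target is not None and value.isdigit():
            target[key] = int(value)
    return maximums, current


def grow_ring_command(device, maximums, current):
    """Build an `ethtool -G` argument list for rings below their maximum."""
    flags = {"RX": "rx", "TX": "tx"}
    args = []
    for name, flag in flags.items():
        if current.get(name, 0) < maximums.get(name, 0):
            args += [flag, str(maximums[name])]
    # Return an empty list when nothing needs to change.
    return ["ethtool", "-G", device] + args if args else []


SAMPLE = """\
Ring parameters for eth0:
Pre-set maximums:
RX:             4096
RX Mini:        0
RX Jumbo:       0
TX:             4096
Current hardware settings:
RX:             256
RX Mini:        0
RX Jumbo:       0
TX:             256
"""
maximums, current = parse_ring_params(SAMPLE)
command = grow_ring_command("eth0", maximums, current)
print(" ".join(command))
```

On a real host the input would come from running `ethtool -g` on the device, and the resulting list could be passed to `subprocess.run(command, check=True)` as root.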

3 - By default, some "auto-tuning" parameters are not set in Linux, and the initial default TCP buffer size is very small. For 10 Gb devices, a buffer size of 16 MB is generally recommended. Larger values are only needed for devices faster than 10 Gb.

3.1 - Edit the /etc/sysctl.conf file and add the following lines at the end of file:

    # Raise the maximum socket buffer size to 16 MB
    net.core.rmem_max = 16777216
    net.core.wmem_max = 16777216
    # Raise the TCP buffer autotuning limit to 16 MB
    net.ipv4.tcp_rmem = 4096 87380 16777216
    net.ipv4.tcp_wmem = 4096 65536 16777216
    # Maximum number of packets queued when the interface
    # receives packets faster than the kernel can process them
    net.core.netdev_max_backlog = 250000

Save file and exit.
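To confirm which of these settings are already active, the desired values can be compared with the live values under /proc/sys. This is a minimal sketch: the helper names are hypothetical, and the key-to-path mapping simply replaces dots with slashes, which holds for the keys used here.

```python
from pathlib import Path


def parse_sysctl_conf(text):
    """Return {key: value} for the assignments in sysctl.conf-style text."""
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(("#", ";")) or "=" not in line:
            continue
        key, value = line.split("=", 1)
        # Normalize whitespace so "4096  87380" and "4096\t87380" compare equal.
        settings[key.strip()] = " ".join(value.split())
    return settings


def proc_path(key):
    """Map a dotted sysctl key to its file under /proc/sys."""
    return Path("/proc/sys") / key.replace(".", "/")


def differences(wanted):
    """Yield (key, live, wanted) for keys whose live value differs."""
    for key, value in wanted.items():
        path = proc_path(key)
        if not path.exists():
            continue                     # key not available on this kernel
        live = " ".join(path.read_text().split())
        if live != value:
            yield key, live, value


WANTED = """\
net.core.rmem_max = 16777216
net.core.wmem_max = 16777216
net.ipv4.tcp_rmem = 4096 87380 16777216
net.ipv4.tcp_wmem = 4096 65536 16777216
net.core.netdev_max_backlog = 250000
"""
settings = parse_sysctl_conf(WANTED)
for key, live, value in differences(settings):
    print(f"{key}: live={live} wanted={value}")
```

The value 16777216 is 16 × 1024 × 1024 bytes, which is where the 16 MB figure in step 3 comes from.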

3.2 - Check which congestion control algorithm is used in your environment. By default, RHEL releases of this generation (kernel 2.6.18) use the 'bic' algorithm:

    # sysctl -a | grep -i congestion_control
    net.ipv4.tcp_congestion_control = bic

For high-speed NICs, algorithms such as 'cubic' or 'htcp' are recommended. Some RHEL 5.3 - 5.5 releases (kernel 2.6.18) have a bug in 'cubic' that is being fixed, so it is recommended to start with the 'htcp' algorithm and check the performance.

At /etc/sysctl.conf file, add the following parameter:

net.ipv4.tcp_congestion_control=htcp

Save file and exit

Then apply the changes made to the sysctl.conf file with the following command: # sysctl -p

Root Cause

The overrun counter is the number of times the NIC was unable to hand received data to a buffer because the input rate exceeded the environment's capacity to receive the data. It is usually a sign of excessive traffic.

Each interface has two buffers (queues) of a fixed size, one for transmitting data and one for receiving data (packets). When one of these queues fills up, the surplus packets are discarded and counted as 'overruns'. In this case, the NIC is trying to receive or transmit more packets than the environment can support.

Diagnostic Steps

- The output of ifconfig shows nonzero 'errors' and 'overruns' counters.

- An article on cisco.com explains these network issues in more detail:

Troubleshooting Ethernet - Table 4-6 show interfaces ethernet Field Descriptions

overrun: Shows the number of times that the receiver hardware was incapable of handing received data to a hardware buffer because the input rate exceeded the receiver's capability to handle the data.

Source: https://ssambback.tistory.com/entry/패킷-Overrun-으로-인한-Frame-loss [Rehoboth.. 이곳에서 부터]
